¹California Institute of Technology, ²Google Research

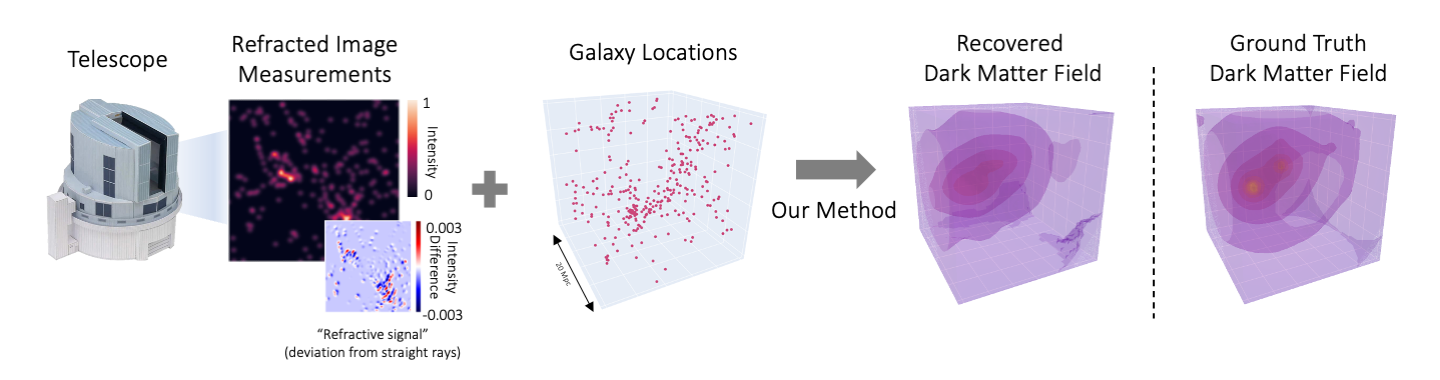

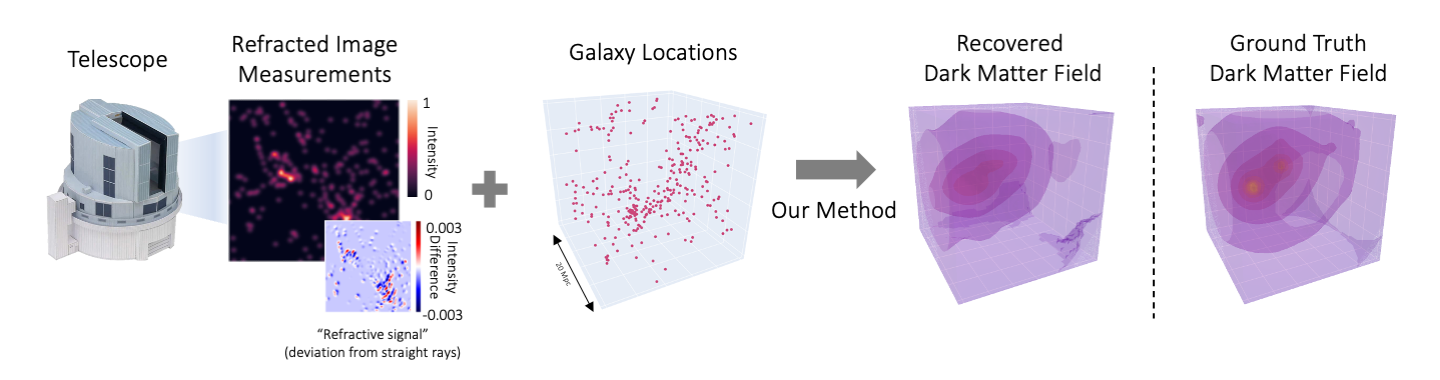

We formulate and present an approach for single-view refractive field reconstruction that explicitly models curved ray paths through a neural field. By leveraging the spatial locations and emission profiles of light sources located throughout the volume, we are able to localize refractive structures in 3D from just a single viewpoint. Here we demonstrate our approach on a simulation involving a realistic refractive field induced by simulated dark matter halos. Despite the large patches of space that are devoid of galaxy emitters, our method is still able to recover the coarse structure of the 3D dark matter distribution from a single simulated telescope image of refracted galaxies. This reconstruction is especially challenging because the refraction is extremely weak, as seen in the relative scale of the intensity difference plot; the refractive effects of dark matter result in an intensity change of at most ±0.3% of the maximum intensity of the original image. This experiment uses a realistic dark matter distribution derived from IllustrisTNG, but we leave the inclusion of realistic measurement noise to future work.

Refractive index tomography is the inverse problem of reconstructing the continuously varying 3D refractive index in a scene using 2D projected image measurements. Although a purely refractive field is not directly visible, it bends light rays as they travel through space, thus providing a signal for reconstruction. The effects of such fields appear in many scientific computer vision settings, ranging from refraction due to transparent cells in microscopy to the lensing of distant galaxies caused by dark matter in astrophysics. Reconstructing these fields is particularly difficult due to the complex nonlinear effects of the refractive field on observed images. Furthermore, while standard 3D reconstruction and tomography settings typically have access to observations of the scene from many viewpoints, many refractive index tomography settings only have access to images observed from a single viewpoint. We introduce a method that leverages prior knowledge of light sources scattered throughout the refractive medium to help disambiguate the single-view refractive index tomography problem. We differentiably trace curved rays through a neural field representation of the refractive field, and optimize its parameters to best reproduce the observed image. We demonstrate the efficacy of our approach by reconstructing simulated refractive fields, analyze the effects of light source distribution on the recovered field, and test our method on a simulated dark matter mapping problem where we successfully recover the 3D refractive field caused by a realistic dark matter distribution.
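To make the idea of tracing curved rays concrete, the following is a minimal sketch, not the implementation from the paper. It integrates the standard ray equation of geometric optics, d/ds(n dx/ds) = ∇n, with a simple first-order scheme. As assumptions for illustration only, it swaps the neural field for a hypothetical analytic Gaussian index bump (`gaussian_index`) with a hand-written gradient, and the names `trace_ray`, `step`, and `num_steps` are likewise invented here. In a differentiable framework, the same update could be applied to a learned field so that gradients flow back to its parameters.

```python
import math


def gaussian_index(pos, amplitude=0.05, center=(0.0, 0.0, 0.0), sigma=0.5):
    """Hypothetical refractive field: n = 1 + A * exp(-r^2 / (2 sigma^2)).

    Returns the index n and its analytic gradient at `pos`.
    (Stand-in for a neural field; not the paper's representation.)
    """
    d = [p - c for p, c in zip(pos, center)]
    r2 = sum(x * x for x in d)
    bump = amplitude * math.exp(-r2 / (2.0 * sigma ** 2))
    grad = [-bump * x / sigma ** 2 for x in d]
    return 1.0 + bump, grad


def trace_ray(origin, direction, index_fn, step=0.01, num_steps=400):
    """Trace one ray through a continuously varying refractive index.

    Uses the first-order system dx/ds = v / n, dv/ds = grad n,
    where v = n * dx/ds, advanced with semi-implicit Euler steps.
    Returns the list of visited positions.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    pos = list(origin)
    n, _ = index_fn(pos)
    v = [n * c / norm for c in direction]  # optical direction vector
    path = [tuple(pos)]
    for _ in range(num_steps):
        n, grad = index_fn(pos)
        v = [vi + step * gi for vi, gi in zip(v, grad)]       # bend the ray
        pos = [pi + step * vi / n for pi, vi in zip(pos, v)]  # advance along it
        path.append(tuple(pos))
    return path


# A ray passing 0.3 units above the bump is pulled toward the higher index.
bent = trace_ray((-2.0, 0.3, 0.0), (1.0, 0.0, 0.0), gaussian_index)
print("final position of bent ray:", bent[-1])
```

In this toy setting the ray bends toward the region of higher refractive index, which is the signal a reconstruction method would have to invert; with `amplitude=0.0` the same routine reduces to a straight line.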

Paper [pdf] | Code [GitHub] | Supplement [pdf]

@inproceedings{zhao2024single,
  title={Single View Refractive Index Tomography with Neural Fields},
  author={Zhao, Brandon and Levis, Aviad and Connor, Liam and Srinivasan, Pratul P and Bouman, Katherine L},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}